Create the Pi cluster

This is the final step for this homelab.

Configure

Set some config options in the environment

export CLUSTER_NAME=lm-pi-homelab # or whatever

export CONTROL_PLANE_MACHINE_COUNT=1

# try not to go crazy with this one, consider your personal capacity.

# nodes tend to need 2GB of RAM to boot, with about 4GB considered enough

# to run apps. MicroVMs can be over-committed, so on a board with 4GB RAM

# you'll want no more than 2 nodes (control plane included).

export WORKER_MACHINE_COUNT=3

export KUBERNETES_VERSION=1.21.8

export MVM_KERNEL_IMAGE=docker.io/claudiaberesford/flintlock-kernel-arm:5.10.77

export MVM_ROOT_IMAGE=docker.io/claudiaberesford/capmvm-kubernetes-arm:1.21.8
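The sizing comment above can be turned into rough per-board arithmetic. This is only a sketch: `max_nodes_for_board` is a hypothetical helper, not part of any Liquid Metal tooling, and it simply divides a board's RAM by a per-node budget. The default budget is the 2GB boot figure quoted above and does not try to model over-commit.

```shell
# Rough upper bound on MicroVM nodes for a single board.
# Assumption: each node is budgeted a fixed amount of RAM (default 2GB,
# the "needed to boot" figure). Pass 4 as the second argument to budget
# for running apps instead.
max_nodes_for_board() {
  board_ram_gb=$1
  per_node_gb=${2:-2}
  # Integer division: any remainder is left as headroom for the host.
  echo $(( board_ram_gb / per_node_gb ))
}

# Usage:
#   max_nodes_for_board 4      # 4GB board, 2GB per node
#   max_nodes_for_board 8 4    # 8GB board, 4GB per node
```

Add up the result for each board and compare it with CONTROL_PLANE_MACHINE_COUNT plus WORKER_MACHINE_COUNT before generating the manifest.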

CAPMVM will use kube-vip to assign a virtual IP to the cluster.

Choose an address from outside your router's DHCP pool. For example, if the pool runs from 192.168.1.64 to 192.168.1.244,

you could use 192.168.1.63.

If you copied Claudia's demo setup, then use an IP from outside that range instead.

export CONTROL_PLANE_VIP="192.168.1.63" # update to suit your network
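As a quick sanity check on the address chosen above, the sketch below tests whether the last octet of an IP falls outside a DHCP pool. `vip_outside_pool` is a hypothetical helper, and it assumes a /24 network where the pool is described by its first and last final octets (64 and 244 in the example above). It does not detect addresses that are statically assigned to another device.

```shell
# Succeeds (exit 0) when the last octet of an IPv4 address lies outside
# the inclusive range [pool_start, pool_end].
# Assumption: a /24 network, so only the final octet matters.
vip_outside_pool() {
  ip=$1
  pool_start=$2
  pool_end=$3
  # Strip everything up to and including the last dot.
  last_octet=${ip##*.}
  [ "$last_octet" -lt "$pool_start" ] || [ "$last_octet" -gt "$pool_end" ]
}

# Usage:
#   vip_outside_pool "$CONTROL_PLANE_VIP" 64 244 && echo "VIP is outside the pool"
```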

Generate

Use clusterctl to generate a cluster manifest:

clusterctl generate cluster -i microvm:$CAPMVM_VERSION -f flannel $CLUSTER_NAME > cluster.yaml

We are using flannel for the cluster's CNI.

There is also a cilium flavour available, or you can leave the -f <flavour> flag off and

apply your own choice of CNI after the fact.

If you need to enable more kernel features for your CNI, you can supply custom

images in the MVM_KERNEL_IMAGE and MVM_ROOT_IMAGE env vars at the top of this page. The Liquid Metal images

are here if you want to use them as a base.

Open the cluster.yaml file and add the addresses of your boards' flintlockd

servers under the MicrovmCluster's spec.placement.staticPool.hosts.

While you are there you can also add a public SSH key if you want.
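To find the section to edit without scrolling through the whole manifest, something like the following may help. It is a minimal sketch: `show_static_pool` is a hypothetical helper that prints the lines following `staticPool:` with their line numbers, and the default of 8 lines of context is an arbitrary choice.

```shell
# Print the staticPool section of a manifest with line numbers.
# Arguments: manifest path (default cluster.yaml), lines of context (default 8).
show_static_pool() {
  manifest=${1:-cluster.yaml}
  context=${2:-8}
  grep -n -A "$context" 'staticPool:' "$manifest"
}

# Usage:
#   show_static_pool cluster.yaml
```

Running it again after editing is an easy way to confirm that every board's address made it into the hosts list.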


Because we are on ARM, the kernel binary in the image will be at a different

location (it defaults to x86's boot/vmlinux). Claudia: I say "will be" like I didn't

put it there. Sorry, I have not had time to rebuild and move that yet; it is on my list.

We need to edit the section of the manifest which points to that binary (at some point these more fiddly bits of config will go away).

Edit the two MicrovmMachineTemplate specs, setting spec.template.spec.kernel.filename

to boot/image.
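If you would rather not edit both templates by hand, the change can be scripted. The sketch below assumes the generated manifest writes the default literally as `filename: boot/vmlinux`; check your own cluster.yaml first, since that exact formatting is an assumption. `set_arm_kernel_path` is a hypothetical helper name.

```shell
# Rewrite the x86 default kernel path to the ARM one, in place.
# A copy of the original file is kept alongside with a .bak suffix.
# Assumption: the manifest contains the literal text "filename: boot/vmlinux".
set_arm_kernel_path() {
  manifest=${1:-cluster.yaml}
  sed -i.bak 's|filename: boot/vmlinux|filename: boot/image|' "$manifest"
  # Print the resulting lines so both templates can be checked by eye.
  grep -n 'filename:' "$manifest"
}

# Usage:
#   set_arm_kernel_path cluster.yaml
```

The output should show two `filename: boot/image` lines, one per MicrovmMachineTemplate.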


Once you have made those changes, save and close the file.

Apply

Once you are happy with the manifest, apply it to your management cluster:

kubectl apply -f cluster.yaml

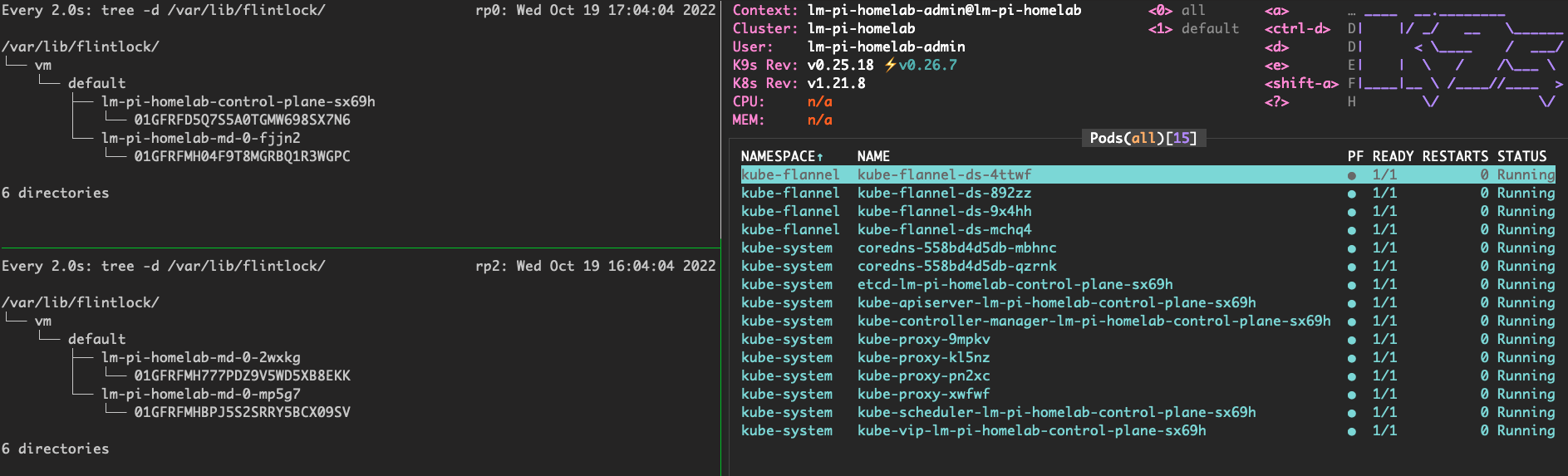


Use

After a moment, you can fetch the MicroVM workload cluster's kubeconfig from

your management cluster. This kubeconfig is written to a secret by CAPI:

kubectl get secret $CLUSTER_NAME-kubeconfig -o json | jq -r .data.value | base64 -d > config.yaml

With that kubeconfig you can target the Liquid Metal cluster with kubectl:

kubectl --kubeconfig config.yaml get nodes

This may not return anything for a few moments; you will need to wait for the MicroVMs

to start and for the cluster control plane to be bootstrapped.

Prepend the command with watch and eventually (within about 5 minutes) you

will see the errors stop and the cluster come up.

An expected error for the first 2-3 minutes is:

Unable to connect to the server: dial tcp 192.168.1.63:6443: connect: no route to host
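As an alternative to watch, a small retry loop can wait quietly until the API server answers. This is a sketch only: `retry_until` is a hypothetical helper, and the 300 second timeout in the usage line just mirrors the rough five minute estimate above.

```shell
# Re-run a command until it succeeds or a timeout is reached.
# Arguments: timeout in seconds, interval in seconds, then the command.
retry_until() {
  timeout_s=$1
  interval_s=$2
  shift 2
  elapsed=0
  # Output is discarded so the expected connection errors stay out of the way.
  until "$@" >/dev/null 2>&1; do
    if [ "$elapsed" -ge "$timeout_s" ]; then
      return 1
    fi
    sleep "$interval_s"
    elapsed=$(( elapsed + interval_s ))
  done
  return 0
}

# Usage:
#   retry_until 300 10 kubectl --kubeconfig config.yaml get nodes \
#     && kubectl --kubeconfig config.yaml get nodes
```

The second kubectl call in the usage line prints the node list once the first one has succeeded.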


From there you can use the cluster as you would normally. The management kind cluster can

be thrown away if you like.

Delete

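If and when you want to delete your Raspberry Pi cluster, DO NOT run kubectl delete -f cluster.yaml. Deleting every object in the manifest at once can leave resources behind that you then have to clean up manually.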

Instead run:

kubectl delete cluster $CLUSTER_NAME